Scottish inventor John Logie Baird (1888-1946) was a pioneer of video processing. He invented the television, a working version of which was first demonstrated in 1926. His method for displaying a stream of video used mechanical picture scanning with an electronic transmitter and receiver.

Baird with his invention, the first television

An old idea known as persistence of vision held that an afterimage persists on the retina for about one twenty-fifth of a second. This has since been discredited and is now regarded as a myth: it is no longer considered true that humans perceive moving images as the result of persisted vision.

Motion perception is probably described more accurately by the following two phenomena:

- Phi phenomenon: the optical illusion of perceiving continuous motion between separate objects viewed in rapid succession.

- Beta movement: the optical illusion that fixed images appear to move, even though the images themselves are not moving.

When a viewer is shown images at a rate of more than four per second, the human eye receives an impression of movement.

Storage

Certain types of video file need copious amounts of storage. The largest type that springs to mind is uncompressed HD video, which can use approximately 1 gigabyte every 3 seconds. This estimate assumes 3 bytes per pixel at a resolution of 1920 x 1080 and 60 frames per second, which totals roughly 373.2 megabytes per second.

Even in today's world of technological advancements, that amount of data is far too bulky and awkward to handle. To combat this, there is a large variety of compression algorithms and standards which can significantly reduce data usage in the storage, processing, streaming and transmission of videos.
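The arithmetic behind this estimate can be checked with a short sketch. The function name is illustrative, and the figures assume a 1920 x 1080 frame, 3 bytes per pixel and 60 frames per second, with decimal megabytes and gigabytes.

```python
def uncompressed_rate_bytes_per_second(width, height, bytes_per_pixel, fps):
    """Raw data rate of uncompressed video, in bytes per second."""
    return width * height * bytes_per_pixel * fps


# Assumed parameters: full HD frame, 3 bytes per pixel, 60 frames per second.
rate = uncompressed_rate_bytes_per_second(1920, 1080, 3, 60)

print(f"{rate / 1_000_000:.1f} MB per second")
print(f"{3 * rate / 1_000_000_000:.2f} GB in three seconds")
```

Three seconds of footage at this rate comes to a little over 1 gigabyte, which is where the rule of thumb comes from.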

Video Processing Vital Terminology:

Bit rate: The number of bits per second used to represent the video portion of the file. Bit rates vary widely, typically from 300 to 8000 kbps. As with sound files, a lower bit rate generally means a lower quality of video.
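To give a feel for what these bit rates mean in practice, the following sketch estimates the size of the video portion of a file from its bit rate and duration. The function name and the one-minute duration are illustrative assumptions, and container overhead and audio are ignored.

```python
def video_size_megabytes(bit_rate_kbps, duration_seconds):
    """Approximate size of the video stream in decimal megabytes."""
    total_bits = bit_rate_kbps * 1000 * duration_seconds
    return total_bits / 8 / 1_000_000


# Compare the two ends of the typical range for one minute of video.
for kbps in (300, 8000):
    print(f"{kbps} kbps for 60 s is about {video_size_megabytes(kbps, 60)} MB")
```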

Interlacing or Progressive Video:

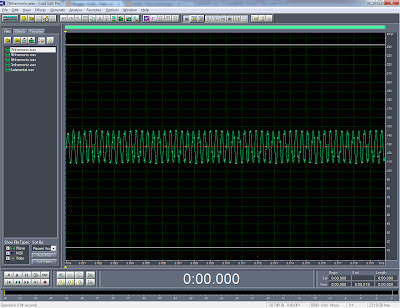

Interlaced video is a method of making good use of limited bandwidth for video transmission, and was especially important when analogue transmissions were prominent. The receiver tricks the viewer by drawing only the odd-numbered lines in one pass (a field), twenty-five times per second. The even-numbered lines are then drawn in the next field and the process is repeated. Progressive video does not do this: it draws every line of each frame in order, and as a result appears much sharper.
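The idea of splitting a picture into odd and even lines can be sketched as follows. This is a simplified illustration that treats a frame as a list of scan lines; the function names are hypothetical, and real interlaced video captures the two fields at slightly different moments in time.

```python
def split_fields(frame):
    """Split a frame (a list of scan lines) into odd and even fields.

    Lines are numbered from 1, so list index 0 holds line 1 (an odd line).
    """
    odd_field = frame[0::2]
    even_field = frame[1::2]
    return odd_field, even_field


def weave(odd_field, even_field):
    """Rebuild a full frame by alternating lines from the two fields."""
    frame = []
    for i, odd_line in enumerate(odd_field):
        frame.append(odd_line)
        if i < len(even_field):
            frame.append(even_field[i])
    return frame


frame = [f"line {n}" for n in range(1, 7)]
odd, even = split_fields(frame)
print(odd)
print(even)
print(weave(odd, even) == frame)
```

Each field carries only half of the lines, which is why interlacing needs roughly half the bandwidth of sending every full frame at the same rate.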

Resolution:

Resolution is the number of pixels, expressed as width by height, used to represent an image. At first, in the analogue days, video was represented by a resolution of roughly 352 x 240 in North America and 352 x 288 in Europe. Advancements mean that today, high definition television is represented by 1920 x 1080 pixels. The movement away from analogue signals has meant televisions can now display Blu-ray quality pictures and at times even double up as computer monitors.

This image shows a scale of definition, with the highest to the left

Video File Formats

MPEG-1:

- Development started in 1988 and was finalised in 1992, when the first MPEG-1 decoder became available

- It could compress video by a ratio of about 26:1 and audio by about 6:1

- The format was designed to compress VHS quality digital video and CD audio while compromising quality as little as possible.

- It is currently the most widely compatible lossy compression format in the world

- It is part of the same standard as the MP3 audio format

- MPEG-1 video and layer I/II audio can now be used in applications legally for free, as the patents expired in 2003, meaning royalties and license fees were no longer applicable
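As a rough illustration of what the 26:1 and 6:1 ratios mean, the sketch below applies them to raw bit rates. The CD audio figures (44,100 samples per second, 16 bits, 2 channels) are standard, but the video source (352 x 240 pixels, 24 bits per pixel, 30 frames per second) is an assumption chosen for illustration, and the function name is hypothetical.

```python
def compressed_bit_rate(raw_bits_per_second, ratio):
    """Bit rate after compressing by ratio:1 (for example ratio=26 for 26:1)."""
    return raw_bits_per_second / ratio


# CD audio: 44,100 samples per second, 16 bits per sample, 2 channels.
cd_audio_raw = 44_100 * 16 * 2
cd_audio_compressed = compressed_bit_rate(cd_audio_raw, 6)

# Assumed video source: 352 x 240 pixels, 24 bits per pixel, 30 frames per second.
video_raw = 352 * 240 * 24 * 30
video_compressed = compressed_bit_rate(video_raw, 26)

print(f"audio: {cd_audio_raw / 1000:.1f} kbps -> {cd_audio_compressed / 1000:.1f} kbps")
print(f"video: {video_raw / 1e6:.1f} Mbps -> {video_compressed / 1e6:.1f} Mbps")
```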

MPEG-2

- Work began on this format in 1990, before MPEG-1 had been finalised

- It was intended to extend the MPEG-1 format and use high bit rates (3-5 Mbit per second) to provide full broadcast quality video.

MPEG-4

- A patented collection of methods defining the compression of audio and video, creating a standard for a number of audio and video codecs (coder/decoders).

- Shares many features with MPEG-1 and MPEG-2, whilst enabling 3D rendering, Digital Rights Management and other interactive features

QuickTime

- First appeared in 1991, produced by Apple, beating Microsoft by a year in its attempts to add a video format to Windows.

- Approved in 1998 by the ISO as the basis of the MPEG-4 file format

AVI (Audio Video Interleave)

- First seen in 1992, implemented by Microsoft as part of its Video for Windows technology.

- It takes the form of a file container, allowing synchronized audio and video playback

- Files can sometimes appear stretched or squeezed and lose definition, because they do not contain aspect ratio information and may therefore be rendered with square pixels.

- However, certain types of software such as VLC and MPlayer have features which can solve problems with AVI file playback.

- Despite being an older format, AVI can still be beneficial. Its longevity means files can be played back on a wide range of systems, with only MPEG-1 being better in that respect.

- The format is also very well documented, both by its creators Microsoft and various third parties.
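The aspect ratio problem mentioned above can be illustrated with a small sketch. The 720 x 576 stored frame and the intended 4:3 display shape are assumed example values, not something stated by the AVI format, and the function name is hypothetical.

```python
from fractions import Fraction


def display_width(stored_height, display_aspect_ratio):
    """Width at which a frame should be shown to match the intended shape."""
    return round(stored_height * display_aspect_ratio)


# Assumed example: a 720 x 576 frame that was meant to be shown at 4:3.
stored_width, stored_height = 720, 576
intended_ratio = Fraction(4, 3)

# With square pixels the picture takes the shape of the stored frame instead.
square_pixel_ratio = Fraction(stored_width, stored_height)
corrected_width = display_width(stored_height, intended_ratio)

print(f"shape with square pixels: {square_pixel_ratio}")
print(f"intended shape: {intended_ratio}")
print(f"corrected display size: {corrected_width} x {stored_height}")
```

A player that knows the intended ratio can scale the picture to the corrected width; without that information it can only guess, which is why the picture may look squeezed.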

WMV (Windows Media Video)

- Made by Microsoft with various proprietary codecs

- Files tend to be wrapped in the Advanced Systems Format (ASF) container, which does not itself specify how the video is encoded

- The ASF wrapper is frequently responsible for supporting Digital Rights Management

- Video encoded with Windows Media Video 9 can also be placed inside an AVI container

- Can be played on PCLinuxOS using software including the aforementioned VLC and MPlayer

3GP

- There are two similar formats: 3GPP (a container for GSM phones, such as those on T-Mobile) and 3GPP2 (a container for CDMA phones, such as those on Verizon)

- 3GPP files frequently carry a 3GP file extension, whereas 3GPP2 files carry 3G2 extensions

- 3GP/3G2 store video files using an MPEG-4 format. Some mobile phones use MP4 to represent 3GP.

- This method reduces bandwidth and storage requirements whilst still trying to deliver a high quality of video.

- Again, VLC and MPlayer help Linux operating systems support 3GP files, which can be encoded and decoded with FFmpeg.

FLV (Flash Video)

- File container used to transmit video over the internet

- Used by household internet names such as YouTube, Google, Yahoo and MetaCafe

- While the format itself is open, the codecs used in production are generally patented

Video Quality versus Speed of Access

- The more compression is applied, the more information is lost, leading to more distortion in the picture

Algorithms that compress video still have problems with content that is unpredictable and detailed, the prime example being live sports events. Automatic Video Quality Assessment could provide a solution to this.
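The trade-off between compression and distortion can be illustrated with a toy example. The sketch below is not a video codec: it uses simple uniform quantisation of made-up brightness values as a stand-in for lossy compression, and measures the loss with the mean squared error. All names and sample values are assumptions for illustration.

```python
def quantize(samples, step):
    """Round each sample to the nearest multiple of step (a lossy operation)."""
    return [round(s / step) * step for s in samples]


def mean_squared_error(original, approximated):
    """Average squared difference between two equally long sequences."""
    return sum((a - b) ** 2 for a, b in zip(original, approximated)) / len(original)


# Made-up 8-bit brightness values standing in for a row of pixels.
pixels = [12, 47, 83, 120, 155, 190, 221, 250]

# A larger step keeps fewer distinct levels, so fewer bits are needed per value.
steps = (1, 8, 32, 64)
errors = [mean_squared_error(pixels, quantize(pixels, step)) for step in steps]

for step, error in zip(steps, errors):
    print(f"step {step}: mean squared error {error}")
```

For these sample values the error grows with every increase in step size, mirroring the way heavier compression discards more of the picture.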