At this point I was left with the following wave. The speech was now far more coherent, but the high-pitched sound still remained. This improvement was achieved by applying several notch filters at 440 Hz and 2 dB.
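The Python sketch below shows what a notch filter does to the signal. It is not Cool Edit Pro's implementation, whose internals are not documented here. Instead it uses the standard second-order IIR notch from Robert Bristow-Johnson's Audio EQ Cookbook. The sample rate (44100 Hz) and the Q factor (10) are assumptions. The "2 dB" setting used in the editor is not modelled, because its exact meaning in that dialog is not stated.

```python
import math

def notch_coefficients(f0, fs, q):
    """Second-order notch (RBJ Audio EQ Cookbook), normalised so that a0 == 1."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    cos_w0 = math.cos(w0)
    a0 = 1 + alpha
    b = [1 / a0, -2 * cos_w0 / a0, 1 / a0]        # zeros sit exactly on f0
    a = [1.0, -2 * cos_w0 / a0, (1 - alpha) / a0]  # poles just inside them
    return b, a

def biquad(samples, b, a):
    """Run a biquad filter in direct form I over a list of float samples."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out

def notch(samples, f0=440.0, fs=44100, q=10.0):
    """Remove a narrow band around f0 (assumed defaults: 440 Hz, 44.1 kHz, Q = 10)."""
    b, a = notch_coefficients(f0, fs, q)
    return biquad(samples, b, a)
```

Once the filter's start-up transient has died away, a 440 Hz tone is almost completely removed. A tone well away from 440 Hz, such as 1000 Hz, passes nearly unchanged. A higher Q narrows the notch, so less of the surrounding speech is affected, but the filter takes longer to settle.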

I then continued to add notch filters as before, and supplemented them with hiss reduction and adjustments to the lower and upper tones. There was a loud drone at both the start and the end of the sound, so I reduced the amplitude of both sections, three decibels at a time, until the drone was sufficiently quiet. The wave now appeared like this:
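Cutting a section "three decibels at a time" multiplies its amplitude by 10^(-3/20) ≈ 0.708 at each step. Four steps therefore give a 12 dB cut, which leaves roughly a quarter of the original amplitude. The Python sketch below illustrates this arithmetic. The function names and the sample-index region are hypothetical and are not taken from Cool Edit Pro.

```python
def db_to_gain(db):
    """Convert a change in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)

def attenuate_region(samples, start, end, db):
    """Return a copy of samples with samples[start:end] scaled by `db` decibels."""
    gain = db_to_gain(db)
    out = list(samples)
    for i in range(start, min(end, len(out))):
        out[i] *= gain
    return out

def repeated_cuts(samples, start, end, step_db, steps):
    """Apply `steps` successive cuts of `step_db` each (e.g. -3 dB at a time)."""
    for _ in range(steps):
        samples = attenuate_region(samples, start, end, step_db)
    return samples
```

For example, `repeated_cuts(wave, 0, n, -3, 4)` would lower the first `n` samples by 12 dB and leave the rest of the wave untouched.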

The next part of the lab was to make the speaker sound angry. I aimed to do this by making the beginning of each sentence louder, boosting its amplitude by 6 decibels. I also applied a 6 decibel boost to the last section of speech so that the angry tone was sustained to the end. To strengthen the effect further, making it seem as if the speaker had raised their voice, I applied an amplitude feature called "fade in" with a 10 decibel setting.
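A 6 dB boost roughly doubles the amplitude (10^(6/20) ≈ 1.995). Boosted samples can therefore exceed full scale and clip. The Python sketch below shows a boost with hard clipping and a gain ramp. How Cool Edit Pro interprets its 10 dB "fade in" setting is not stated here. The sketch assumes, as a hypothetical, a ramp that rises linearly in decibels from 0 dB to +10 dB across the selected region. Samples are assumed to be floats in [-1, 1].

```python
def boost(samples, start, end, db):
    """Scale samples[start:end] by `db` decibels, hard-clipping to [-1, 1]."""
    gain = 10 ** (db / 20)
    out = list(samples)
    for i in range(start, min(end, len(out))):
        out[i] = max(-1.0, min(1.0, out[i] * gain))
    return out

def gain_ramp(samples, start, end, start_db, end_db):
    """Hypothetical fade: gain moves linearly in dB from start_db to end_db over [start, end)."""
    out = list(samples)
    end = min(end, len(out))
    length = end - start
    for k, i in enumerate(range(start, end)):
        frac = k / (length - 1) if length > 1 else 1.0
        db = start_db + (end_db - start_db) * frac
        out[i] = max(-1.0, min(1.0, out[i] * 10 ** (db / 20)))
    return out
```

The clipping step matters here. Without it, a 6 dB boost on a sample already at 0.8 would produce 1.6, which a 16-bit WAV file cannot represent.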

The next part of the lab was to make the recording sound as if it had taken place in a church. I did this by adding a "reverb" delay effect with the "large empty hall" preset. As the name suggests, this gives the effect of being in an empty hall, which is often how a church sounds. The speech now sounds fuller and more resonant, as if it were echoing around the room.
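A hall reverb such as the "large empty hall" preset is far denser than a single echo, and its algorithm is not described here. The Python sketch below shows only the basic building block behind many delay-based reverbs: a feedback comb filter, y[n] = x[n] + g·y[n − D]. Each pass through the loop adds a quieter copy of the sound D samples later. The delay and feedback values are assumptions chosen for illustration.

```python
def feedback_echo(samples, delay, feedback, tail=0):
    """Feedback comb filter: y[n] = x[n] + feedback * y[n - delay].

    `tail` appends that many silent samples so the echoes can ring out.
    """
    if delay < 1:
        raise ValueError("delay must be at least one sample")
    if not 0 <= feedback < 1:
        raise ValueError("feedback must be in [0, 1) for the echoes to decay")
    x = list(samples) + [0.0] * tail
    y = []
    for n, xn in enumerate(x):
        echo = feedback * y[n - delay] if n >= delay else 0.0
        y.append(xn + echo)
    return y
```

At 44100 Hz, a delay of about 2205 samples (50 ms) with a feedback of around 0.5 gives a noticeable echo. Practical reverbs combine several such filters with different delays so that the individual repeats blur into a continuous decay.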

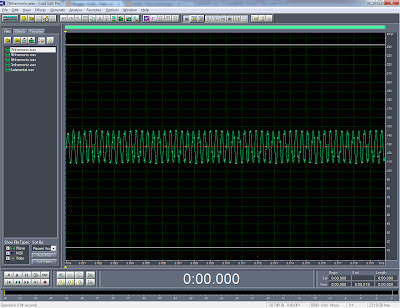

Finally, I had to incorporate a bell sound into the file. I downloaded a WAV file of a repeating church bell from the internet, opened it in Cool Edit Pro, and used "Copy" followed by "Mix Paste" to mix it into the speech. After this, the bells continued long after the speech had ended, so I cut that section out to avoid making the file unnecessarily long. Adding the bells not only gave the sound the desired effect, but also helped mask the slight high-pitched sound that remained. The final waveform looked like this:
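A mix paste adds the copied audio to the existing samples instead of overwriting them. The Python sketch below illustrates that idea and the follow-up trim. It is not Cool Edit Pro's implementation. The function names, the optional gain and the clipping to [-1, 1] are assumptions. The file length grows when the pasted audio runs past the end of the speech, which is why the trailing bells then had to be cut.

```python
def mix_paste(base, overlay, offset=0, overlay_gain=1.0):
    """Sum `overlay` into `base` starting at `offset`, extending the result if needed."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    length = max(len(base), offset + len(overlay))
    out = list(base) + [0.0] * (length - len(base))
    for i, v in enumerate(overlay):
        out[offset + i] += overlay_gain * v
    # Hard-clip so that loud overlaps stay within full scale.
    return [max(-1.0, min(1.0, v)) for v in out]

def trim(samples, length):
    """Keep only the first `length` samples (e.g. the length of the speech)."""
    return samples[:length]
```

Trimming to `len(speech)` after mixing removes the part of the bell recording that outlasts the speech, matching the cut described above.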